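Design approaches for servers handling client requests

1. One thread per request

After the listening socket (sockfd) accepts a connection, a single-threaded server blocks in recv until that client sends data. A new client can still connect to sockfd, but the data it sends is not processed. Each client therefore gets its own thread.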

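First, the headers that the code below relies on:

    #include <errno.h>
    #include <netinet/in.h>
    #include <pthread.h>
    #include <stdint.h>
    #include <stdio.h>
    #include <string.h>
    #include <sys/socket.h>
    #include <unistd.h>

Next, the thread function. recv returns 0 when the client disconnects, so count == 0 is used to decide when to close the connection (a negative count is an error and is handled the same way).

    void *client_thread(void *arg)
    {
        int clientfd = (int)(intptr_t)arg;   // the fd is passed by value, not by pointer

        while (1)
        {
            char buffer[1024] = {0};
            int count = recv(clientfd, buffer, sizeof(buffer) - 1, 0);
            if (count <= 0)   // 0: client disconnected, <0: error
            {
                printf("client disconnect:%d\n", clientfd);
                close(clientfd);
                break;
            }
            printf("RECV:%s\n", buffer);

            count = send(clientfd, buffer, count, 0);   // echo the data back
            printf("SEND:%d\n", count);
        }
        return NULL;
    }

In main, first set up the listening socket, then accept connections and hand each one to a thread that receives the data.

The I/O handle for a connection between client and server is a file descriptor (fd).

An fd is an int. Descriptors 0, 1 and 2 are initially standard input, standard output and standard error.

The first descriptor created is therefore 3. The kernel always hands out the lowest unused number: if 3, 4 and 5 are open and 4 has been closed, the next connection gets 4 again. A new number such as 6 appears only while the old descriptor is still open on the server side. The wait of roughly 60 s that follows a closed TCP connection is the TIME_WAIT state, which affects reuse of the address and port, not descriptor numbers.

    int main()
    {
        // Create the communication endpoint. AF_INET = IPv4, SOCK_STREAM = TCP
        // (SOCK_DGRAM = UDP), 0 = default protocol for that socket type.
        int sockfd = socket(AF_INET, SOCK_STREAM, 0);

        // Fill in sockaddr_in with the address and port to listen on.
        struct sockaddr_in servaddr;
        memset(&servaddr, 0, sizeof(servaddr));
        servaddr.sin_family = AF_INET;
        servaddr.sin_addr.s_addr = htonl(INADDR_ANY);   // 0.0.0.0
        servaddr.sin_port = htons(2000);   // ports 0-1023 are reserved, so use a higher one

        // Bind the socket to that address.
        if (-1 == bind(sockfd, (struct sockaddr*)&servaddr, sizeof(servaddr)))
        {
            printf("bind failed:%s\n", strerror(errno));
            return -1;
        }

        // Start listening.
        listen(sockfd, 10);
        printf("listen finished:%d\n", sockfd);

        struct sockaddr_in clientaddr;
        socklen_t len = sizeof(clientaddr);

    #if 0
        // Single connection, handled once in the main thread.
        printf("accept\n");
        int clientfd = accept(sockfd, (struct sockaddr*)&clientaddr, &len);
        printf("accept:finished\n");

        char buffer[1024] = {0};
        int count = recv(clientfd, buffer, sizeof(buffer) - 1, 0);
        printf("RECV:%s\n", buffer);

        count = send(clientfd, buffer, count, 0);
        printf("SEND:%d\n", count);
    #else
        while (1)
        {
            printf("accept\n");
            len = sizeof(clientaddr);   // accept overwrites len, so reset it each time
            int clientfd = accept(sockfd, (struct sockaddr*)&clientaddr, &len);
            printf("accept:finished\n");

            pthread_t thid;
            pthread_create(&thid, NULL, client_thread, (void*)(intptr_t)clientfd);
            pthread_detach(thid);   // the thread is never joined
        }
    #endif

        getchar();
        printf("exit\n");
        return 0;
    }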
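The descriptor numbering described above can be checked with a small experiment. This is a minimal sketch, not part of the server: the function name closed_fd_is_reused is made up for illustration, and it uses a pipe instead of sockets so that it runs without a network.

```c
#include <unistd.h>

/* Returns 1 if the descriptor number freed by close() is handed out again
 * by the very next allocation, 0 if not, -1 on error. */
int closed_fd_is_reused(void)
{
    int p[2];
    if (pipe(p) == -1)
        return -1;

    int freed = p[1];
    close(freed);               /* this number is now the lowest unused one */

    int again = dup(p[0]);      /* dup() returns the lowest unused descriptor */
    if (again == -1) {
        close(p[0]);
        return -1;
    }
    int reused = (again == freed);

    close(p[0]);
    close(again);
    return reused;
}
```

In a single-threaded program the function is expected to return 1, since nothing else can claim the freed number between close and dup.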

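The code is simple, but with many clients every connection costs a thread and its stack, so memory use grows and the approach does not scale. The next section shows a way to multiplex I/O instead.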

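2. Using select

select watches all sockets at once, which gives I/O multiplexing.

Disable the one-thread-per-request branch in main (set its condition to 0) and add the following branch, which uses select (declared in <sys/select.h>):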

    #else
        fd_set rfds, rset;   // rfds: every watched fd; rset: per-call copy that select modifies
        FD_ZERO(&rfds);
        FD_SET(sockfd, &rfds);   // watch the listening socket
        int maxfd = sockfd;

        while (1)
        {
            rset = rfds;
            int nready = select(maxfd + 1, &rset, NULL, NULL, NULL);

            // accept
            if (FD_ISSET(sockfd, &rset))
            {
                int clientfd = accept(sockfd, (struct sockaddr*)&clientaddr, &len);
                printf("accept:finished:%d\n", clientfd);
                FD_SET(clientfd, &rfds);
                if (clientfd > maxfd) maxfd = clientfd;
            }

            // recv
            int i = 0;
            for (i = sockfd + 1; i <= maxfd; i++)
            {
                if (FD_ISSET(i, &rset))
                {
                    char buffer[1024] = {0};
                    int count = recv(i, buffer, sizeof(buffer) - 1, 0);
                    if (count <= 0)   // disconnect or error
                    {
                        printf("client disconnect:%d\n", i);
                        close(i);
                        FD_CLR(i, &rfds);
                        continue;
                    }
                    printf("RECV:%s\n", buffer);

                    count = send(i, buffer, count, 0);
                    printf("SEND:%d\n", count);
                }
            }
        }

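select meets the I/O multiplexing requirement, but it takes many parameters and the code is cumbersome. The next section does the same with poll.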

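3. Using poll

In main, disable the select branch (set its condition to 0) and add the following branch, which uses poll (declared in <poll.h>).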

    #else
        struct pollfd fds[1024];
        int i = 0;
        for (i = 0; i < 1024; i++)
        {
            fds[i].fd = -1;   // poll ignores entries whose fd is negative
            fds[i].events = 0;
            fds[i].revents = 0;
        }
        fds[sockfd].fd = sockfd;
        fds[sockfd].events = POLLIN;   // readable
        int maxfd = sockfd;

        while (1)
        {
            int nready = poll(fds, maxfd + 1, -1);   // -1: block until an event arrives

            if (fds[sockfd].revents & POLLIN)   // revents reports what actually happened
            {
                int clientfd = accept(sockfd, (struct sockaddr*)&clientaddr, &len);
                printf("accept finished:%d\n", clientfd);
                fds[clientfd].fd = clientfd;
                fds[clientfd].events = POLLIN;
                if (clientfd > maxfd) maxfd = clientfd;
            }

            for (i = sockfd + 1; i <= maxfd; i++)
            {
                if (fds[i].revents & POLLIN)
                {
                    char buffer[1024] = {0};
                    int count = recv(i, buffer, sizeof(buffer) - 1, 0);
                    if (count <= 0)   // disconnect or error
                    {
                        printf("client disconnect:%d\n", i);
                        close(i);
                        fds[i].fd = -1;
                        fds[i].events = 0;
                        continue;
                    }
                    printf("RECV:%s\n", buffer);

                    count = send(i, buffer, count, 0);   // echo, as in the select version
                    printf("SEND:%d\n", count);
                }
            }
        }

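4. Using epoll

The interface consists of one structure and three calls, declared in <sys/epoll.h>:

    struct epoll_event;   // events: bit mask such as EPOLLIN; data: user data such as the fd
    int epoll_create(int size);
    int epoll_ctl(int epfd, int op, int fd, struct epoll_event *event);
    int epoll_wait(int epfd, struct epoll_event *events, int maxevents, int timeout);

Whereas select copies the whole descriptor set on every call and then loops over all of it, epoll keeps the watched descriptors inside the kernel. epoll_ctl registers sockfd, epoll_wait blocks until events arrive and returns only the ready descriptors, each accepted client is registered with epoll_ctl, and a client is removed with epoll_ctl once it is finished.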

    #else
        int epfd = epoll_create(1);   // creates the epoll instance, which is itself an fd

        struct epoll_event ev;
        ev.events = EPOLLIN;
        ev.data.fd = sockfd;
        epoll_ctl(epfd, EPOLL_CTL_ADD, sockfd, &ev);   // watch the listening socket

        while (1)
        {
            struct epoll_event events[1024] = {0};
            int nready = epoll_wait(epfd, events, 1024, -1);

            int i = 0;
            for (i = 0; i < nready; i++)
            {
                int connfd = events[i].data.fd;
                if (connfd == sockfd)
                {
                    int clientfd = accept(sockfd, (struct sockaddr*)&clientaddr, &len);
                    printf("accept finished:%d\n", clientfd);

                    ev.events = EPOLLIN;
                    ev.data.fd = clientfd;
                    epoll_ctl(epfd, EPOLL_CTL_ADD, clientfd, &ev);
                }
                else if (events[i].events & EPOLLIN)
                {
                    char buffer[1024] = {0};
                    int count = recv(connfd, buffer, sizeof(buffer) - 1, 0);
                    if (count <= 0)   // disconnect or error
                    {
                        printf("client disconnect:%d\n", connfd);
                        epoll_ctl(epfd, EPOLL_CTL_DEL, connfd, NULL);   // remove before close
                        close(connfd);
                        continue;
                    }
                    printf("RECV:%s\n", buffer);

                    count = send(connfd, buffer, count, 0);
                    printf("SEND:%d\n", count);
                }
            }
        }

5. Using reactor

io →event →callback

listenfd→EPOLLIN →accept_cb

clientfd→EPOLLIN →recv_cb

clientfd→EPOLLOUT→send_cb

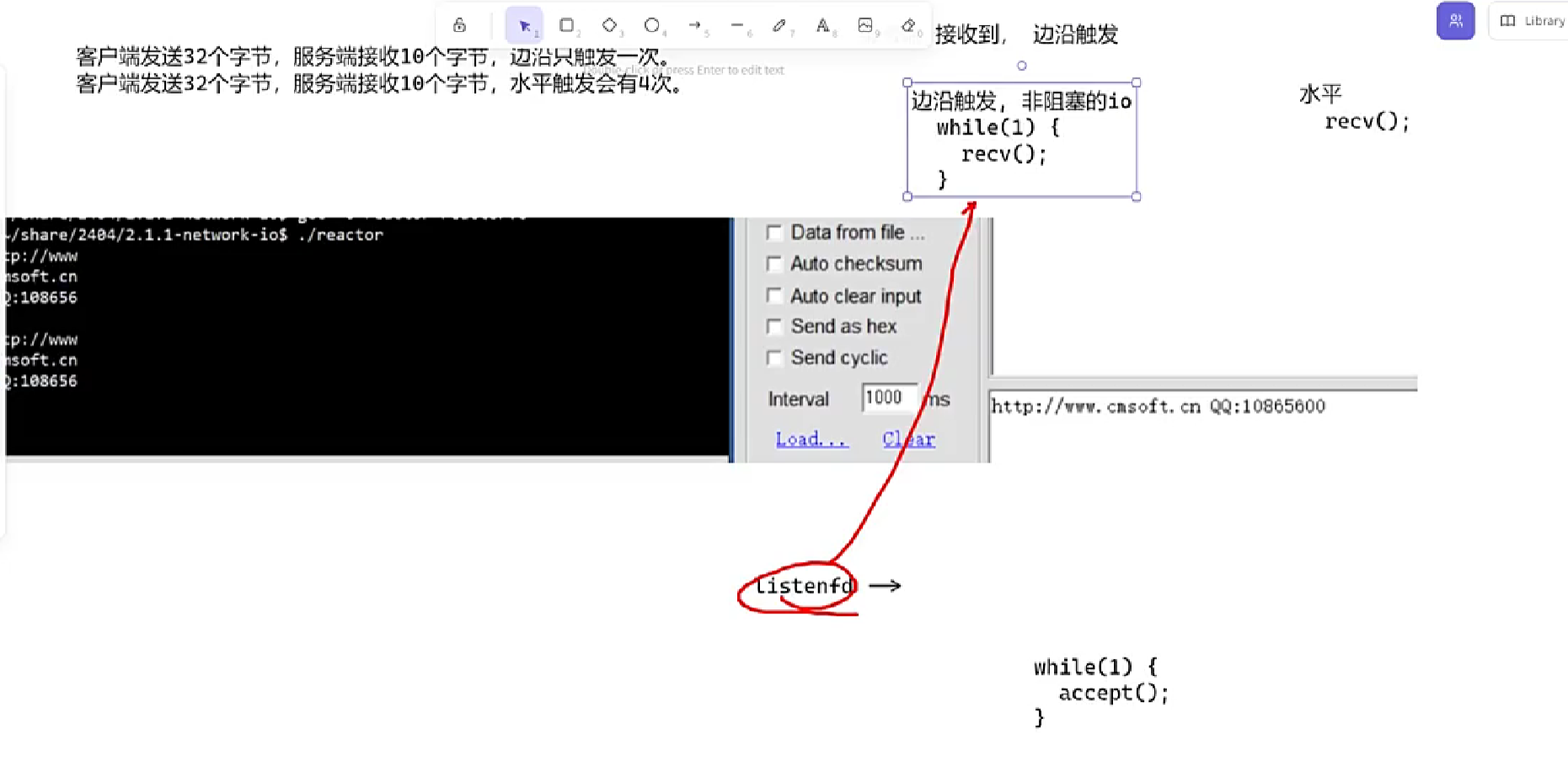

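From the I/O point of view there are two kinds of descriptor, listenfd and clientfd. From the event point of view there are also two kinds, EPOLLIN and EPOLLOUT.

A reactor needs two things:

1. A mapping from each event to its callback

2. The parameters that belong to each I/O

Because a callback runs only when its event is ready, a reactor does not block while sending or receiving data. This is its main advantage.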
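The two requirements can be sketched as a table indexed by descriptor that stores the callbacks and buffer for each I/O. This is an illustrative echo reactor under stated assumptions, not the original listing: accept_cb, recv_cb and send_cb come from the mapping above, while conns, reactor_add, reactor_listen, reactor_step and MAX_FD are hypothetical names, and partial sends are ignored for brevity.

```c
#include <string.h>
#include <sys/epoll.h>
#include <sys/socket.h>
#include <unistd.h>

#define MAX_FD 1024
#define BUF_LEN 1024

typedef void (*event_cb)(int fd);

/* Per-descriptor state: the parameters that belong to one I/O. */
struct conn {
    char buf[BUF_LEN];
    int len;              /* bytes waiting to be echoed */
    event_cb recv_cb;     /* called on EPOLLIN  */
    event_cb send_cb;     /* called on EPOLLOUT */
};

static struct conn conns[MAX_FD];
static int epfd = -1;

static int set_event(int fd, int op, unsigned int events)
{
    struct epoll_event ev;
    ev.events = events;
    ev.data.fd = fd;
    return epoll_ctl(epfd, op, fd, &ev);
}

/* Registers fd with its callbacks; it starts out waiting for EPOLLIN. */
int reactor_add(int fd, event_cb on_in, event_cb on_out)
{
    if (fd < 0 || fd >= MAX_FD) return -1;
    if (epfd == -1 && (epfd = epoll_create(1)) == -1) return -1;
    conns[fd].len = 0;
    conns[fd].recv_cb = on_in;
    conns[fd].send_cb = on_out;
    return set_event(fd, EPOLL_CTL_ADD, EPOLLIN);
}

static void drop_conn(int fd)
{
    epoll_ctl(epfd, EPOLL_CTL_DEL, fd, NULL);
    close(fd);
    memset(&conns[fd], 0, sizeof(conns[fd]));
}

static void send_cb(int fd)
{
    if (send(fd, conns[fd].buf, conns[fd].len, MSG_NOSIGNAL) < 0) {
        drop_conn(fd);
        return;
    }
    conns[fd].len = 0;
    set_event(fd, EPOLL_CTL_MOD, EPOLLIN);     /* wait for the next request */
}

static void recv_cb(int fd)
{
    int n = recv(fd, conns[fd].buf, BUF_LEN, 0);
    if (n <= 0) {                              /* 0: peer closed, <0: error */
        drop_conn(fd);
        return;
    }
    conns[fd].len = n;
    set_event(fd, EPOLL_CTL_MOD, EPOLLOUT);    /* reply once the socket is writable */
}

static void accept_cb(int listenfd)
{
    int clientfd = accept(listenfd, NULL, NULL);
    if (clientfd >= 0 && reactor_add(clientfd, recv_cb, send_cb) == -1)
        close(clientfd);
}

/* The listening socket is just another fd whose EPOLLIN callback is accept_cb. */
int reactor_listen(int listenfd) { return reactor_add(listenfd, accept_cb, NULL); }

/* One turn of the event loop: wait, then dispatch each event to its callback. */
int reactor_step(int timeout_ms)
{
    struct epoll_event events[64];
    int n = epoll_wait(epfd, events, 64, timeout_ms);
    for (int i = 0; i < n; i++) {
        int fd = events[i].data.fd;
        if ((events[i].events & EPOLLIN) && conns[fd].recv_cb)
            conns[fd].recv_cb(fd);
        else if ((events[i].events & EPOLLOUT) && conns[fd].send_cb)
            conns[fd].send_cb(fd);
        else
            drop_conn(fd);                     /* EPOLLERR or EPOLLHUP with no handler */
    }
    return n;
}
```

A server would call reactor_listen on its listening socket once and then call reactor_step in a loop. The dispatch code never asks whether a descriptor is a listener or a client; it only looks at the event and the stored callback.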

6. How to use reactor

Move from traditional per-I/O handling to event-driven handling.

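Summary

① One thread per request:

socket: create the network endpoint

bind: bind the address

listen: start listening

clientfd = accept: establish the connection

pthread_t declares the thread ID; pthread_create creates the thread

recv: receive from clientfd into buffer

send: send from buffer to clientfd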
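The socket, bind and listen steps can be folded into one helper that checks every return value. This is a sketch under assumptions: the name make_listener and its parameters are made up for illustration, and SO_REUSEADDR is an addition that the original code does not set.

```c
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Creates a TCP socket listening on all interfaces at the given port.
 * Returns the listening descriptor, or -1 on failure. */
int make_listener(unsigned short port, int backlog)
{
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd == -1)
        return -1;

    /* Assumption: allow quick restarts while old connections sit in TIME_WAIT. */
    int on = 1;
    setsockopt(fd, SOL_SOCKET, SO_REUSEADDR, &on, sizeof(on));

    struct sockaddr_in addr;
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_ANY);   /* 0.0.0.0 */
    addr.sin_port = htons(port);                /* 0 lets the kernel pick a free port */

    if (bind(fd, (struct sockaddr *)&addr, sizeof(addr)) == -1 ||
        listen(fd, backlog) == -1) {
        close(fd);
        return -1;
    }
    return fd;
}
```

With such a helper, the setup in main reduces to a call like make_listener(2000, 10) followed by the accept loop.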

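② select:

fd_set: create the descriptor sets

FD_ZERO: clear a set

FD_SET: set the bit for a socket

select: block until at least one descriptor is readable

FD_ISSET: test whether a descriptor is ready

FD_CLR: remove a descriptor from the set

Drawbacks:

Every call copies the fd_set from user space to kernel space

Every call scans all descriptors up to maxfd, which wastes time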
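To put a number on the first drawback, the sketch below reports how large one fd_set is and how many descriptors it can track. The helper names are made up for illustration; both values are fixed by the C library, regardless of how many sockets are actually watched.

```c
#include <stddef.h>
#include <sys/select.h>

/* Bytes occupied by one fd_set, the unit that is copied on each select() call. */
size_t fd_set_bytes(void)
{
    return sizeof(fd_set);
}

/* Number of descriptors one fd_set can track; fds must be below this value. */
int fd_set_capacity(void)
{
    return FD_SETSIZE;
}
```

The set is a bitmap with one bit per possible descriptor, so its size does not shrink when only a few sockets are in use.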

③ poll:

struct pollfd fds[]: create the array

fds[].fd and fds[].events: the descriptor and the requested events

poll(): block and wait

fds[sockfd].revents & POLLIN: call accept

fds[clientfd].revents & POLLIN: call recv

④ epoll:

epoll_create(1)

struct epoll_event ev: create the event structure

ev.events, ev.data.fd

epoll_ctl(): add

accept

epoll_wait()

recv

send

epoll_ctl(): delete

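Use cases:

① One thread per request (small number of connections)

A small, fixed number of connections, such as a database connection pool

Financial trading systems (each transaction needs its own computation)

② select (medium number of connections)

Cross-platform network tools (file transfer tools)

Embedded device monitoring

③ poll

Linux servers with moderate concurrency (medium number of connections)

Monitoring several event types (for example, handling POLLPRI before POLLIN)

④ epoll (large number of connections)

High-concurrency Linux servers

Real-time communication systems

⑤ reactor (large number of connections, complex business logic)

High-performance middleware

Network frameworks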