C4.5算法建立决策树分类模型(原理+例题+解题思路+C代码)

1 题目

根据下表输入采用C4.5算法建立决策树分类模型

1、定义决策树数据结构

2、编写方法计算属性的信息增益率

3、选择节点分裂属性

4、建立决策树

5、对新的输入进行分类预测

2 原理

C4.5 决策树算法是 ID3 的改进型。它以信息增益率(Gain Ratio)作为节点划分标准,可有效避免偏向于取值较多的属性,具有更好的泛化性能。

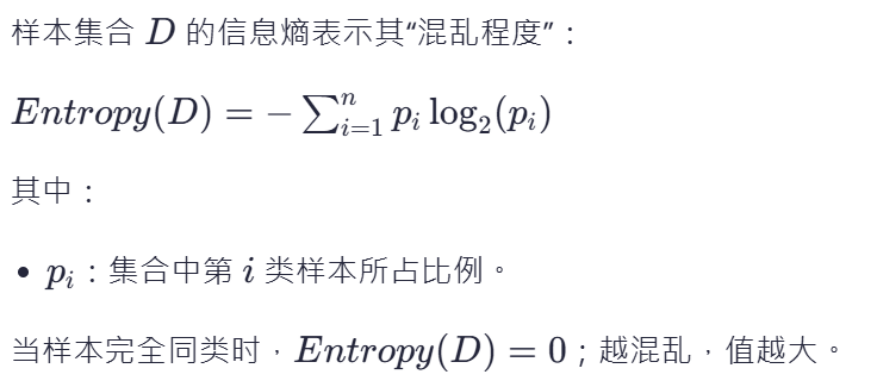

1. 信息熵的定义

3.信息增益与分裂信息

4.信息增益率

5.决策树生成过程

3 代码编写思路

1.定义数据结构与准备数据集

实验的第一步是抽象并构建用来存放训练数据与树节点的数据结构。决策树是一种典型的树形层次结构,而原始数据集可以被视为属性字段组成的二维表格。在 C 语言中,这种数据抽象通过 struct 结构体实现最为直观。

Sample 结构体精确地对应了每一条训练样本,记录天气数据集中的四个属性(Outlook、Temperature、Humidity、Windy)和一个标签(Play)。

Node 结构体则用于描述决策树中的一个节点。每个节点可能是叶节点(is_leaf=1),也可能是分裂节点(is_leaf=0),并通过 children[] 递归引用子节点,实现树的嵌套层次关系。

然后将天气数据集的14条样本以静态数组形式硬编码,方便直接运行测试。这种数组结构使得程序在设计时无需文件解析部分,重点集中于算法逻辑实现。

#define MAX_SAMPLES 20

#define MAX_ATTR 5

#define MAX_VALUE_LEN 20

#define MAX_RULE_LEN 256

#define MAX_RULES 50

//一、结构体定义

typedef struct Node

{ char attribute[MAX_VALUE_LEN];

char label[MAX_VALUE_LEN];

int is_leaf;

int branch_count;

char branch_values[MAX_SAMPLES][MAX_VALUE_LEN];

struct Node *children[MAX_SAMPLES];

} Node;

typedef struct

{ char Outlook[MAX_VALUE_LEN];

char Temperature[MAX_VALUE_LEN];

char Humidity[MAX_VALUE_LEN];

char Windy[MAX_VALUE_LEN];

char Play[MAX_VALUE_LEN];

} Sample;

// 二、训练数据集定义

Sample data[MAX_SAMPLES] =

{ {"sunny", "hot", "high", "false", "No"},

{"sunny", "hot", "high", "true", "No"},

{"overcast", "hot", "high", "false", "Yes"},

{"rain", "mild", "high", "false", "Yes"},

{"rain", "cool", "normal", "false", "Yes"},

{"rain", "cool", "normal", "true", "No"},

{"overcast", "cool", "normal", "true", "Yes"},

{"sunny", "mild", "high", "false", "No"},

{"sunny", "cool", "normal", "false", "Yes"},

{"rain", "mild", "normal", "false", "Yes"},

{"sunny", "mild", "normal", "true", "Yes"},

{"overcast", "mild", "high", "true", "Yes"},

{"overcast", "hot", "normal", "false", "Yes"},

{"rain", "mild", "high", "true", "No"}

};

int total_samples = 14;

char *attributes[] = {"Outlook", "Temperature", "Humidity", "Windy"};

int attr_count = 4;

2.信息熵与增益运算模块的实现

信息熵(Entropy)度量了样本集合的不确定性,是决策树划分判断的核心指标。为了构建决策树,需要实现一系列基于信息论的计算函数。entropy() 函数用于计算给定样本集的香农熵,以衡量其纯度。split_info() 计算属性的分裂信息,而 info_gain() 则计算属性的信息增益,并在需要时打印详细的子集熵、权重和贡献,以展示其内部计算逻辑。最后,gain_ratio() 函数通过信息增益和分裂信息计算信息增益率,这是 C4.5 算法选择最佳分裂属性的核心指标。

①字符串比较工具 equals():

为了提高代码的可读性并简化字符串比较操作,首先定义了一个简单的辅助函数 equals()。它通过封装 strcmp() 函数,将复杂的 strcmp(a, b) == 0 表达式简化为更符合自然语言习惯的 equals(a, b),使代码逻辑更加清晰。

int equals(const char *a, const char *b)

{ return strcmp(a, b) == 0;

}

/* ---------- 计算样本集合的熵 ---------- */

double entropy(Sample *data, int size)

{ if (size == 0) return 0.0;

int count_yes = 0, count_no = 0;

for (int i = 0; i < size; i++)

{ if (equals(data[i].Play, "Yes")) count_yes++;

else count_no++;

}

double p1 = (double)count_yes / size, p2 = (double)count_no / size;

double e = 0.0;

if (p1 > 0) e -= p1 * log2(p1);

if (p2 > 0) e -= p2 * log2(p2);

return e;

}

②信息熵计算函数 entropy():

信息熵是衡量样本集纯度的核心指标。entropy() 函数实现了香农熵公式 。它接收一个样本子集 data 及其大小 size 作为输入,首先遍历子集,统计出“Yes”和“No”两种标签的数量,然后计算出它们各自的比例 p1p1 和 p2p2。最后,根据公式计算并返回该子集的信息熵。当子集为空或完全纯净时,熵为 0。

/* ---------- 计算样本集合的熵 ---------- */

double entropy(Sample *data, int size)

{ if (size == 0) return 0.0;

int count_yes = 0, count_no = 0;

for (int i = 0; i < size; i++)

{ if (equals(data[i].Play, "Yes")) count_yes++;

else count_no++;

}

double p1 = (double)count_yes / size, p2 = (double)count_no / size;

double e = 0.0;

if (p1 > 0) e -= p1 * log2(p1);

if (p2 > 0) e -= p2 * log2(p2);

return e;

}

③分裂信息计算函数 split_info():

C4.5 算法使用信息增益率而非信息增益,是为了消除对具有多取值属性的偏好。split_info() 函数就是用于计算信息增益率公式中的分母——分裂信息(Split Information)。该函数首先统计指定属性 attr 的所有不同取值及其出现次数,然后根据各取值所占的比例计算并返回该属性的内在信息值。

/* ---------- 计算分裂信息(Split Info) ---------- */

double split_info(Sample *data, int size, char *attr)

{ if (size == 0) return 0;

char values[MAX_SAMPLES][MAX_VALUE_LEN];

int counts[MAX_SAMPLES] = {0}, value_count = 0;

for (int i = 0; i < size; i++)

{ char *val;

if (equals(attr, "Outlook")) val = data[i].Outlook;

else if (equals(attr, "Temperature")) val = data[i].Temperature;

else if (equals(attr, "Humidity")) val = data[i].Humidity;

else val = data[i].Windy;

int found = 0;

for (int j = 0; j < value_count; j++)

if (equals(values[j], val))

{ counts[j]++;

found = 1;

break;

}

if (!found)

{ strcpy(values[value_count], val);

counts[value_count++] = 1;

}

}

double si = 0;

for (int i = 0; i < value_count; i++)

{ double p = (double)counts[i] / size;

si -= p * log2(p);

}

return si;

}

④信息增益计算函数 info_gain():

信息增益(Information Gain)表示的是在得知某一属性的取值后,系统不确定性的减少量。info_gain() 函数实现了公式 Gain(A)=Entropy(D)−InfoA(D)Gain(A)=Entropy(D)−InfoA(D)。它首先调用 entropy() 计算整个数据集的总熵。

然后,它遍历指定属性 attr 的所有不同取值,将数据集划分为多个子集,并对每个子集递归调用 entropy() 计算其熵。最后,将这些子集熵进行加权求和,得到条件熵 InfoA(D)InfoA(D),并从总熵中减去它,得到信息增益。该函数还包含一个 print_detail 参数,用于控制是否打印详细的中间计算过程,极大地增强了算法的可解释性和调试便利性。

/* ---------- 计算信息增益(Info Gain) ---------- */

double info_gain(Sample *data, int size, char *attr, int print_detail)

{ double total_entropy = entropy(data, size);

char values[MAX_SAMPLES][MAX_VALUE_LEN];

int value_count = 0;

for (int i = 0; i < size; i++)

{ char *val;

if (equals(attr, "Outlook")) val = data[i].Outlook;

else if (equals(attr, "Temperature")) val = data[i].Temperature;

else if (equals(attr, "Humidity")) val = data[i].Humidity;

else val = data[i].Windy;

int found = 0;

for (int j = 0; j < value_count; j++)

if (equals(values[j], val))

{ found = 1;

break;

}

if (!found) strcpy(values[value_count++], val);

}

double weighted_entropy = 0;

if (print_detail)

printf(" 子集熵计算:

");

for (int i = 0; i < value_count; i++)

{ Sample subset[MAX_SAMPLES];

int subset_size = 0;

for (int j = 0; j < size; j++)

{ char *val;

if (equals(attr, "Outlook")) val = data[j].Outlook;

else if (equals(attr, "Temperature")) val = data[j].Temperature;

else if (equals(attr, "Humidity")) val = data[j].Humidity;

else val = data[j].Windy;

if (equals(val, values[i]))

subset[subset_size++] = data[j];

}

double subset_entropy = entropy(subset, subset_size);

double p = (double)subset_size / size;

weighted_entropy += p * subset_entropy;

if (print_detail)

printf(" %-10s: 熵=%.4f, 权重=%.2f, 贡献=%.4f

",

values[i], subset_entropy, p, p * subset_entropy);

}

if (print_detail)

{ printf(" Info_%s(D) = %.4f

", attr, weighted_entropy);

printf(" 信息增益计算: %.4f - %.4f = %.4f

",

total_entropy, weighted_entropy, total_entropy - weighted_entropy);

}

return total_entropy - weighted_entropy;

}

⑤信息增益率计算函数 gain_ratio()

gain_ratio() 函数是 C4.5 算法的核心决策函数。它将 info_gain() 和 split_info() 的计算结果组合起来,实现了信息增益率的计算:GainRatio(A)=Gain(A)SplitInfo(A)GainRatio(A)=SplitInfo(A)Gain(A)。通过调用前面已经封装好的模块,该函数的实现变得非常简洁。它首先获取信息增益 ig 和分裂信息 si,然后返回二者的比值。为了避免除以零的错误,当分裂信息为0时,直接返回0。这个函数最终的返回值将作为选择最佳分裂属性的依据。

/* ---------- 信息增益率 ---------- */

double gain_ratio(Sample *data, int size, char *attr)

{ double ig = info_gain(data, size, attr, 0);

double si = split_info(data, size, attr);

return (si == 0) ? 0 : ig / si;

}

3.递归构建决策树

在所有核心计算函数准备就绪后,实验进入最关键的步骤:构建决策树。这一过程通过一个核心的递归函数 build_tree() 来实现,完美体现了“分而治之”的算法思想。

(1)递归终止条件的判断

build_tree() 函数首先需要确定递归何时停止。C4.5 算法定义了两种主要的终止情况,对应代码中的两个辅助函数:

all_same_label(): 检查当前节点包含的样本是否已经完全属于同一类别。如果是,说明已经到达了一个“纯净”的叶节点,无需再进行划分。

attr_count == 0: 检查是否已经用尽了所有可供划分的属性。如果所有属性都已作为分裂节点使用过,即使样本集还不纯净,也必须停止划分,并根据当前样本中数量最多的类别(即“少数服从多数”原则)来创建一个叶节点。majority_label() 函数负责实现这一逻辑。

(2)核心递归逻辑:build_tree()

build_tree() 函数的执行流程如下:

①创建新节点:为当前子树的根节点动态分配内存。

②检查终止条件:调用上述辅助函数判断是否应创建叶节点。如果是,则标记该节点为叶(is_leaf = 1),赋予其标签,并返回该节点,结束当前分支的递归。

③选择最佳分裂属性:如果递归继续,则遍历所有尚未使用的属性,调用 gain_ratio() 函数计算每个属性的信息增益率,并记录下增益率最高的那个属性作为当前节点的最佳分裂属性。

④划分数据集与递归调用:确定最佳分裂属性后,程序会:

a. 遍历该属性的所有不同取值(如 Outlook 的 'sunny', 'overcast', 'rain')。

b. 对于每个取值,从当前数据集中筛选出所有匹配该值的样本,形成一个新的、更小的子集。

c. 从可用属性列表中移除刚刚使用过的分裂属性。

d. 携带这个新的样本子集和更新后的属性列表,递归调用 build_tree() 函数自身,并将返回的子树根节点连接到当前节点的 children 数组中。

这个过程会自顶向下不断重复,直到所有分支都满足终止条件,最终构建出一棵完整的决策树。

//四、决策树构建

int all_same_label(Sample *data, int size)

{ for (int i = 1; i < size; i++)

if (!equals(data[0].Play, data[i].Play))

return 0;

return 1;

}

const char *majority_label(Sample *data, int size)

{ int count_yes = 0;

for (int i = 0; i < size; i++)

if (equals(data[i].Play, "Yes"))

count_yes++;

return (count_yes >= size - count_yes) ? "Yes" : "No";

}

Node *build_tree(Sample *data, int size, char **attrs, int attr_count)

{ Node *node = (Node *)malloc(sizeof(Node));

memset(node, 0, sizeof(Node));

if (all_same_label(data, size) || attr_count == 0)

{ node->is_leaf = 1;

strcpy(node->label, all_same_label(data, size) ? data[0].Play : majority_label(data, size));

return node;

}

double best_gr = -1;

int best_attr = -1;

for (int i = 0; i < attr_count; i++)

{ double gr = gain_ratio(data, size, attrs[i]);

if (gr > best_gr)

{ best_gr = gr;

best_attr = i;

}

}

strcpy(node->attribute, attrs[best_attr]);

node->is_leaf = 0;

char values[MAX_SAMPLES][MAX_VALUE_LEN];

int value_count = 0;

for (int i = 0; i < size; i++)

{ char *val;

if (equals(attrs[best_attr], "Outlook")) val = data[i].Outlook;

else if (equals(attrs[best_attr], "Temperature")) val = data[i].Temperature;

else if (equals(attrs[best_attr], "Humidity")) val = data[i].Humidity;

else val = data[i].Windy;

int found = 0;

for (int j = 0; j < value_count; j++)

if (equals(values[j], val))

{ found = 1;

break;

}

if (!found) strcpy(values[value_count++], val);

}

for (int i = 0; i < value_count; i++)

{ Sample subset[MAX_SAMPLES];

int subset_size = 0;

for (int j = 0; j < size; j++)

{ char *val;

if (equals(attrs[best_attr], "Outlook")) val = data[j].Outlook;

else if (equals(attrs[best_attr], "Temperature")) val = data[j].Temperature;

else if (equals(attrs[best_attr], "Humidity")) val = data[j].Humidity;

else val = data[j].Windy;

if (equals(values[i], val))

subset[subset_size++] = data[j];

}

char *remaining_attrs[MAX_ATTR];

int new_count = 0;

for (int k = 0; k < attr_count; k++)

if (k != best_attr)

remaining_attrs[new_count++] = attrs[k];

node->children[node->branch_count] = build_tree(subset, subset_size, remaining_attrs, new_count);

strcpy(node->branch_values[node->branch_count++], values[i]);

}

return node;

}

4.结果呈现与模型应用

当决策树在内存中构建完成后,需要将其以人类可读的方式展示出来,并应用到实际的分类任务中。

(1)可视化打印决策树 print_tree()

为了直观地展示树的结构,print_tree() 函数同样采用了递归的方式。它接收一个节点和当前的层级深度 level 作为参数。通过在打印前输出 level 数量的空格,实现了层次化的缩进效果。它会先打印当前节点的信息,然后遍历其所有子节点,并对每个子节点增加缩进深度后进行递归调用。

void print_tree(Node *node, int level)

{ if (node->is_leaf)

{ for (int i = 0; i < level; i++) printf(" ");

printf("Leaf: %s

", node->label);

return;

}

for (int i = 0; i < level; i++) printf(" ");

printf("Node: %s

", node->attribute);

for (int i = 0; i < node->branch_count; i++)

{ for (int j = 0; j < level; j++) printf(" ");

printf(" [%s = %s]

", node->attribute, node->branch_values[i]);

print_tree(node->children[i], level + 2);

}

}

(2)分类预测函数 predict()

predict() 函数模拟了使用决策树进行分类的完整过程。它接收树的根节点和一个待预测的新样本 sample。它会从根节点开始,判断当前节点的类型:

· 如果是叶节点,直接返回其标签作为预测结果。

· 如果是分裂节点,则根据该节点的分裂属性(如 "Outlook"),从输入样本中提取对应的属性值(如 "sunny")。然后,遍历该节点的所有分支,寻找与该属性值匹配的分支,并沿着该分支递归调用 predict() 函数,进入下一层级的决策,直到最终到达一个叶节点。

const char *predict(Node *tree, Sample *sample)

{ if (tree->is_leaf) return tree->label;

const char *val;

if (equals(tree->attribute, "Outlook")) val = sample->Outlook;

else if (equals(tree->attribute, "Temperature")) val = sample->Temperature;

else if (equals(tree->attribute, "Humidity")) val = sample->Humidity;

else val = sample->Windy;

for (int i = 0; i < tree->branch_count; i++)

if (equals(tree->branch_values[i], val))

return predict(tree->children[i], sample);

return "Unknown";

}

(3)决策规则提取 extract_rules()

为了让模型的决策逻辑更加透明,extract_rules() 函数可以将树结构“翻译”成一系列 "IF ... AND ... THEN ..." 格式的规则。它通过递归遍历树的所有路径,在路径上不断拼接条件,当到达一个叶节点时,就形成了一条完整的规则并打印输出

void extract_rules(Node *tree, char *current_rule, int *rule_index)

{ if (tree->is_leaf)

{ printf("规则 %d: %s => %s

", (*rule_index)++, current_rule, tree->label);

return;

}

for (int i = 0; i < tree->branch_count; i++)

{ char new_rule[MAX_RULE_LEN];

if (strlen(current_rule) == 0)

snprintf(new_rule, sizeof(new_rule), "%s=%s", tree->attribute, tree->branch_values[i]);

else

snprintf(new_rule, sizeof(new_rule), "%s AND %s=%s", current_rule, tree->attribute, tree->branch_values[i]);

extract_rules(tree->children[i], new_rule, rule_index);

}

}

5.主程序入口与流程调度

main() 函数是整个实验的总指挥,它按照逻辑顺序调用之前定义的所有模块,完成从数据加载到结果展示的完整流程。

(1)初始化与数据展示:首先打印整个原始数据集,方便对照。

(2)信息增益率计算:循环遍历所有属性,调用 info_gain()(带细节打印)和 gain_ratio(),详细展示每个属性的熵、信息增益和信息增益率的计算过程。

(3)根节点选择:再次计算所有属性的增益率,选出最优者作为树的根节点并打印。

(4)构建并打印树:调用 build_tree() 构建决策树,然后调用 print_tree() 将其可视化输出。

(5)模型应用与评估:定义一个包含新样本的测试集,循环调用 predict() 函数进行预测并打印结果。同时,用原始训练集来测试模型,计算并输出其准确率。

(6)规则提取:最后调用 extract_rules(),将训练好的模型转化为一系列清晰的决策规则。

int main()

{ printf("原始数据:

");

printf("========================================

");

printf(" %-10s %-12s %-10s %-7s %-5s

", "Outlook", "Temperature", "Humidity", "Windy", "Play?");

printf("----------------------------------------

");

for (int i = 0; i < total_samples; i++)

printf(" %-10s %-12s %-10s %-7s %-5s

",

data[i].Outlook, data[i].Temperature, data[i].Humidity, data[i].Windy, data[i].Play);

printf("

================================================================================

");

printf("2. 计算各属性的信息增益率与熵:

");

printf("--------------------------------------------------------------------------------

");

double total_entropy = entropy(data, total_samples);

printf("数据集总熵: %.4f

", total_entropy);

for (int i = 0; i < attr_count; i++)

{ double ig = info_gain(data, total_samples, attributes[i], 1);

double si = split_info(data, total_samples, attributes[i]);

double gr = (si == 0) ? 0 : ig / si;

printf("%s:

", attributes[i]);

printf(" 信息增益(Info Gain): %.4f

", ig);

printf(" 分裂信息(Split Info): %.4f

", si);

printf(" 信息增益率(Gain Ratio): %.4f

", gr);

}

printf("================================================================================

");

printf("3. 选择根节点分裂属性:

");

printf("--------------------------------------------------------------------------------

");

double best_gr = -1;

int best_index = -1;

for (int i = 0; i < attr_count; i++)

{ double gr = gain_ratio(data, total_samples, attributes[i]);

if (gr > best_gr)

{ best_gr = gr;

best_index = i;

}

}

printf("最佳分裂属性: %s

信息增益率: %.4f

", attributes[best_index], best_gr);

printf("

================================================================================

");

printf("4. 建立决策树:

");

printf("--------------------------------------------------------------------------------

");

Node *tree = build_tree(data, total_samples, attributes, attr_count);

printf("决策树结构:

");

print_tree(tree, 0);

printf("================================================================================

");

printf("5. 对新输入进行分类预测:

");

printf("--------------------------------------------------------------------------------

");

Sample tests[] =

{ {"sunny", "cool", "high", "true", ""},

{"overcast", "hot", "normal", "false", ""},

{"rain", "mild", "high", "false", ""},

{"sunny", "mild", "normal", "false", ""},

{"rain", "cool", "normal", "true", ""}

};

int test_count = 5;

for (int i = 0; i < test_count; i++)

{ const char *pred = predict(tree, &tests[i]);

printf("测试样本 %d:

", i + 1);

printf(" 输入: Outlook=%-8s Temperature=%-6s Humidity=%-8s Windy=%-5s

",

tests[i].Outlook, tests[i].Temperature, tests[i].Humidity, tests[i].Windy);

printf(" 预测结果: %s

", pred);

}

printf("================================================================================

");

printf("训练集预测准确率:

");

printf("--------------------------------------------------------------------------------

");

int correct = 0;

for (int i = 0; i < total_samples; i++)

{ const char *pred = predict(tree, &data[i]);

if (equals(pred, data[i].Play)) correct++;

}

double acc = (double)correct / total_samples * 100.0;

printf("准确率: %d/%d = %.2f%%

", correct, total_samples, acc);

printf("================================================================================

");

printf("6. 提取决策规则:

");

printf("--------------------------------------------------------------------------------

");

int rule_index = 1;

extract_rules(tree, "", &rule_index);

printf("================================================================================

");

return 0;

}

4 最终代码

#include

#include

#include

#include

#define MAX_SAMPLES 20

#define MAX_ATTR 5

#define MAX_VALUE_LEN 20

#define MAX_RULE_LEN 256

#define MAX_RULES 50

//一、结构体定义

typedef struct Node

{ char attribute[MAX_VALUE_LEN];

char label[MAX_VALUE_LEN];

int is_leaf;

int branch_count;

char branch_values[MAX_SAMPLES][MAX_VALUE_LEN];

struct Node *children[MAX_SAMPLES];

} Node;

typedef struct

{ char Outlook[MAX_VALUE_LEN];

char Temperature[MAX_VALUE_LEN];

char Humidity[MAX_VALUE_LEN];

char Windy[MAX_VALUE_LEN];

char Play[MAX_VALUE_LEN];

} Sample;

// 二、训练数据集定义

Sample data[MAX_SAMPLES] =

{ {"sunny", "hot", "high", "false", "No"},

{"sunny", "hot", "high", "true", "No"},

{"overcast", "hot", "high", "false", "Yes"},

{"rain", "mild", "high", "false", "Yes"},

{"rain", "cool", "normal", "false", "Yes"},

{"rain", "cool", "normal", "true", "No"},

{"overcast", "cool", "normal", "true", "Yes"},

{"sunny", "mild", "high", "false", "No"},

{"sunny", "cool", "normal", "false", "Yes"},

{"rain", "mild", "normal", "false", "Yes"},

{"sunny", "mild", "normal", "true", "Yes"},

{"overcast", "mild", "high", "true", "Yes"},

{"overcast", "hot", "normal", "false", "Yes"},

{"rain", "mild", "high", "true", "No"}

};

int total_samples = 14;

char *attributes[] = {"Outlook", "Temperature", "Humidity", "Windy"};

int attr_count = 4;

//三、工具函数定义

int equals(const char *a, const char *b)

{ return strcmp(a, b) == 0;

}

/* ---------- 计算样本集合的熵 ---------- */

double entropy(Sample *data, int size)

{ if (size == 0) return 0.0;

int count_yes = 0, count_no = 0;

for (int i = 0; i < size; i++)

{ if (equals(data[i].Play, "Yes")) count_yes++;

else count_no++;

}

double p1 = (double)count_yes / size, p2 = (double)count_no / size;

double e = 0.0;

if (p1 > 0) e -= p1 * log2(p1);

if (p2 > 0) e -= p2 * log2(p2);

return e;

}

/* ---------- 计算分裂信息(Split Info) ---------- */

double split_info(Sample *data, int size, char *attr)

{ if (size == 0) return 0;

char values[MAX_SAMPLES][MAX_VALUE_LEN];

int counts[MAX_SAMPLES] = {0}, value_count = 0;

for (int i = 0; i < size; i++)

{ char *val;

if (equals(attr, "Outlook")) val = data[i].Outlook;

else if (equals(attr, "Temperature")) val = data[i].Temperature;

else if (equals(attr, "Humidity")) val = data[i].Humidity;

else val = data[i].Windy;

int found = 0;

for (int j = 0; j < value_count; j++)

if (equals(values[j], val))

{ counts[j]++;

found = 1;

break;

}

if (!found)

{ strcpy(values[value_count], val);

counts[value_count++] = 1;

}

}

double si = 0;

for (int i = 0; i < value_count; i++)

{ double p = (double)counts[i] / size;

si -= p * log2(p);

}

return si;

}

/* ---------- 计算信息增益(Info Gain) ---------- */

double info_gain(Sample *data, int size, char *attr, int print_detail)

{ double total_entropy = entropy(data, size);

char values[MAX_SAMPLES][MAX_VALUE_LEN];

int value_count = 0;

for (int i = 0; i < size; i++)

{ char *val;

if (equals(attr, "Outlook")) val = data[i].Outlook;

else if (equals(attr, "Temperature")) val = data[i].Temperature;

else if (equals(attr, "Humidity")) val = data[i].Humidity;

else val = data[i].Windy;

int found = 0;

for (int j = 0; j < value_count; j++)

if (equals(values[j], val))

{ found = 1;

break;

}

if (!found) strcpy(values[value_count++], val);

}

double weighted_entropy = 0;

if (print_detail)

printf(" 子集熵计算:

");

for (int i = 0; i < value_count; i++)

{ Sample subset[MAX_SAMPLES];

int subset_size = 0;

for (int j = 0; j < size; j++)

{ char *val;

if (equals(attr, "Outlook")) val = data[j].Outlook;

else if (equals(attr, "Temperature")) val = data[j].Temperature;

else if (equals(attr, "Humidity")) val = data[j].Humidity;

else val = data[j].Windy;

if (equals(val, values[i]))

subset[subset_size++] = data[j];

}

double subset_entropy = entropy(subset, subset_size);

double p = (double)subset_size / size;

weighted_entropy += p * subset_entropy;

if (print_detail)

printf(" %-10s: 熵=%.4f, 权重=%.2f, 贡献=%.4f

",

values[i], subset_entropy, p, p * subset_entropy);

}

if (print_detail)

{ printf(" Info_%s(D) = %.4f

", attr, weighted_entropy);

printf(" 信息增益计算: %.4f - %.4f = %.4f

",

total_entropy, weighted_entropy, total_entropy - weighted_entropy);

}

return total_entropy - weighted_entropy;

}

/* ---------- 信息增益率 ---------- */

double gain_ratio(Sample *data, int size, char *attr)

{ double ig = info_gain(data, size, attr, 0);

double si = split_info(data, size, attr);

return (si == 0) ? 0 : ig / si;

}

//四、决策树构建

int all_same_label(Sample *data, int size)

{ for (int i = 1; i < size; i++)

if (!equals(data[0].Play, data[i].Play))

return 0;

return 1;

}

const char *majority_label(Sample *data, int size)

{ int count_yes = 0;

for (int i = 0; i < size; i++)

if (equals(data[i].Play, "Yes"))

count_yes++;

return (count_yes >= size - count_yes) ? "Yes" : "No";

}

Node *build_tree(Sample *data, int size, char **attrs, int attr_count)

{ Node *node = (Node *)malloc(sizeof(Node));

memset(node, 0, sizeof(Node));

if (all_same_label(data, size) || attr_count == 0)

{ node->is_leaf = 1;

strcpy(node->label, all_same_label(data, size) ? data[0].Play : majority_label(data, size));

return node;

}

double best_gr = -1;

int best_attr = -1;

for (int i = 0; i < attr_count; i++)

{ double gr = gain_ratio(data, size, attrs[i]);

if (gr > best_gr)

{ best_gr = gr;

best_attr = i;

}

}

strcpy(node->attribute, attrs[best_attr]);

node->is_leaf = 0;

char values[MAX_SAMPLES][MAX_VALUE_LEN];

int value_count = 0;

for (int i = 0; i < size; i++)

{ char *val;

if (equals(attrs[best_attr], "Outlook")) val = data[i].Outlook;

else if (equals(attrs[best_attr], "Temperature")) val = data[i].Temperature;

else if (equals(attrs[best_attr], "Humidity")) val = data[i].Humidity;

else val = data[i].Windy;

int found = 0;

for (int j = 0; j < value_count; j++)

if (equals(values[j], val))

{ found = 1;

break;

}

if (!found) strcpy(values[value_count++], val);

}

for (int i = 0; i < value_count; i++)

{ Sample subset[MAX_SAMPLES];

int subset_size = 0;

for (int j = 0; j < size; j++)

{ char *val;

if (equals(attrs[best_attr], "Outlook")) val = data[j].Outlook;

else if (equals(attrs[best_attr], "Temperature")) val = data[j].Temperature;

else if (equals(attrs[best_attr], "Humidity")) val = data[j].Humidity;

else val = data[j].Windy;

if (equals(values[i], val))

subset[subset_size++] = data[j];

}

char *remaining_attrs[MAX_ATTR];

int new_count = 0;

for (int k = 0; k < attr_count; k++)

if (k != best_attr)

remaining_attrs[new_count++] = attrs[k];

node->children[node->branch_count] = build_tree(subset, subset_size, remaining_attrs, new_count);

strcpy(node->branch_values[node->branch_count++], values[i]);

}

return node;

}

//五、打印与预测函数

void print_tree(Node *node, int level)

{ if (node->is_leaf)

{ for (int i = 0; i < level; i++) printf(" ");

printf("Leaf: %s

", node->label);

return;

}

for (int i = 0; i < level; i++) printf(" ");

printf("Node: %s

", node->attribute);

for (int i = 0; i < node->branch_count; i++)

{ for (int j = 0; j < level; j++) printf(" ");

printf(" [%s = %s]

", node->attribute, node->branch_values[i]);

print_tree(node->children[i], level + 2);

}

}

const char *predict(Node *tree, Sample *sample)

{ if (tree->is_leaf) return tree->label;

const char *val;

if (equals(tree->attribute, "Outlook")) val = sample->Outlook;

else if (equals(tree->attribute, "Temperature")) val = sample->Temperature;

else if (equals(tree->attribute, "Humidity")) val = sample->Humidity;

else val = sample->Windy;

for (int i = 0; i < tree->branch_count; i++)

if (equals(tree->branch_values[i], val))

return predict(tree->children[i], sample);

return "Unknown";

}

//六、提取决策规则

void extract_rules(Node *tree, char *current_rule, int *rule_index)

{ if (tree->is_leaf)

{ printf("规则 %d: %s => %s

", (*rule_index)++, current_rule, tree->label);

return;

}

for (int i = 0; i < tree->branch_count; i++)

{ char new_rule[MAX_RULE_LEN];

if (strlen(current_rule) == 0)

snprintf(new_rule, sizeof(new_rule), "%s=%s", tree->attribute, tree->branch_values[i]);

else

snprintf(new_rule, sizeof(new_rule), "%s AND %s=%s", current_rule, tree->attribute, tree->branch_values[i]);

extract_rules(tree->children[i], new_rule, rule_index);

}

}

//七、主程序入口

int main()

{ printf("原始数据:

");

printf("========================================

");

printf(" %-10s %-12s %-10s %-7s %-5s

", "Outlook", "Temperature", "Humidity", "Windy", "Play?");

printf("----------------------------------------

");

for (int i = 0; i < total_samples; i++)

printf(" %-10s %-12s %-10s %-7s %-5s

",

data[i].Outlook, data[i].Temperature, data[i].Humidity, data[i].Windy, data[i].Play);

printf("

================================================================================

");

printf("2. 计算各属性的信息增益率与熵:

");

printf("--------------------------------------------------------------------------------

");

double total_entropy = entropy(data, total_samples);

printf("数据集总熵: %.4f

", total_entropy);

for (int i = 0; i < attr_count; i++)

{ double ig = info_gain(data, total_samples, attributes[i], 1);

double si = split_info(data, total_samples, attributes[i]);

double gr = (si == 0) ? 0 : ig / si;

printf("%s:

", attributes[i]);

printf(" 信息增益(Info Gain): %.4f

", ig);

printf(" 分裂信息(Split Info): %.4f

", si);

printf(" 信息增益率(Gain Ratio): %.4f

", gr);

}

printf("================================================================================

");

printf("3. 选择根节点分裂属性:

");

printf("--------------------------------------------------------------------------------

");

double best_gr = -1;

int best_index = -1;

for (int i = 0; i < attr_count; i++)

{ double gr = gain_ratio(data, total_samples, attributes[i]);

if (gr > best_gr)

{ best_gr = gr;

best_index = i;

}

}

printf("最佳分裂属性: %s

信息增益率: %.4f

", attributes[best_index], best_gr);

printf("

================================================================================

");

printf("4. 建立决策树:

");

printf("--------------------------------------------------------------------------------

");

Node *tree = build_tree(data, total_samples, attributes, attr_count);

printf("决策树结构:

");

print_tree(tree, 0);

printf("================================================================================

");

printf("5. 对新输入进行分类预测:

");

printf("--------------------------------------------------------------------------------

");

Sample tests[] =

{ {"sunny", "cool", "high", "true", ""},

{"overcast", "hot", "normal", "false", ""},

{"rain", "mild", "high", "false", ""},

{"sunny", "mild", "normal", "false", ""},

{"rain", "cool", "normal", "true", ""}

};

int test_count = 5;

for (int i = 0; i < test_count; i++)

{ const char *pred = predict(tree, &tests[i]);

printf("测试样本 %d:

", i + 1);

printf(" 输入: Outlook=%-8s Temperature=%-6s Humidity=%-8s Windy=%-5s

",

tests[i].Outlook, tests[i].Temperature, tests[i].Humidity, tests[i].Windy);

printf(" 预测结果: %s

", pred);

}

printf("================================================================================

");

printf("训练集预测准确率:

");

printf("--------------------------------------------------------------------------------

");

int correct = 0;

for (int i = 0; i < total_samples; i++)

{ const char *pred = predict(tree, &data[i]);

if (equals(pred, data[i].Play)) correct++;

}

double acc = (double)correct / total_samples * 100.0;

printf("准确率: %d/%d = %.2f%%

", correct, total_samples, acc);

printf("================================================================================

");

printf("6. 提取决策规则:

");

printf("--------------------------------------------------------------------------------

");

int rule_index = 1;

extract_rules(tree, "", &rule_index);

printf("================================================================================

");

return 0;

}